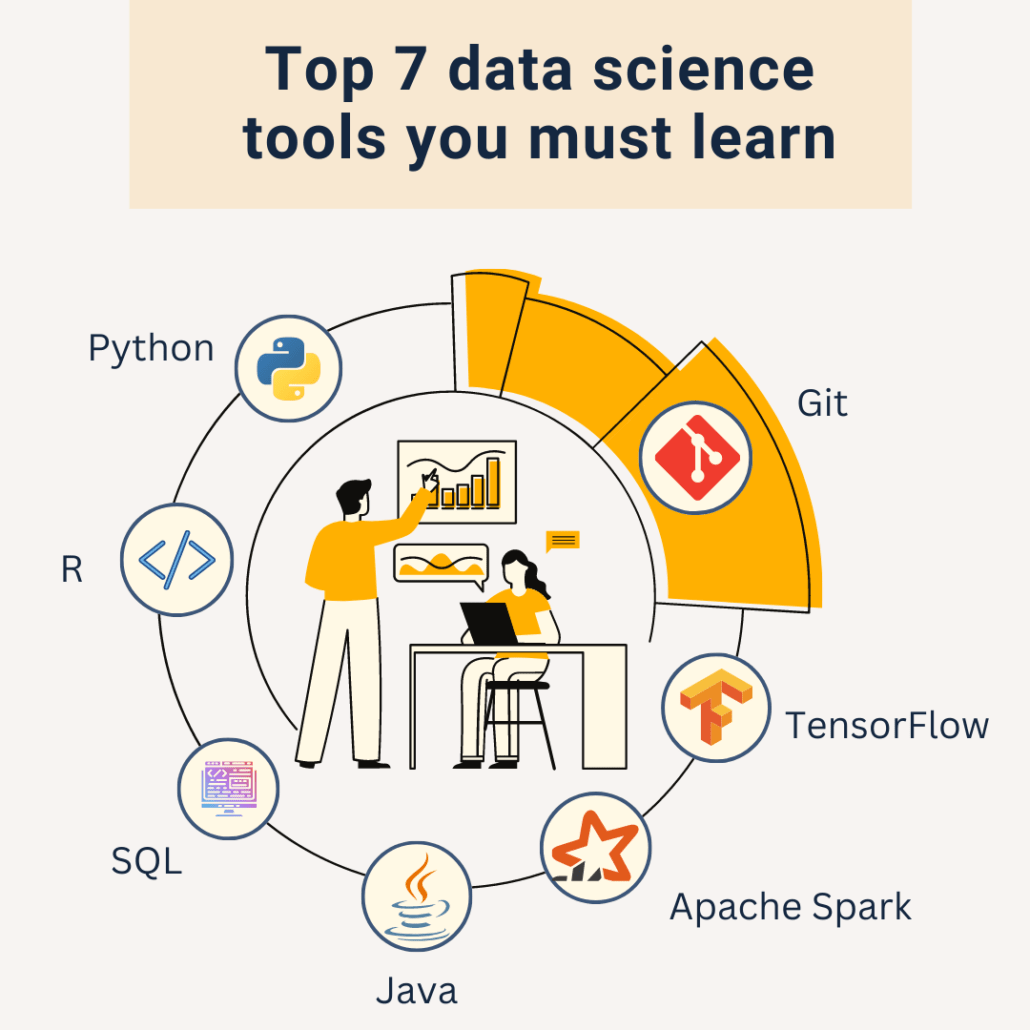

As the field of data science continues to evolve at a breakneck pace, many professionals stay focused on the big names: Python, R, TensorFlow, and Power BI. These industry staples are indispensable, but they often overshadow a host of powerful, underrated tools that can streamline your workflow, improve model performance, and help you deliver deeper insights.


In 2025, with data science roles becoming more demanding and business expectations rising, having a broader toolkit can set you apart. This article will explore the lesser-known yet highly effective data science tools that every aspiring and seasoned data scientist should consider adopting this year.

Why Go Beyond the Popular Tools?

It’s easy to stick with what everyone else is using, but doing so can sometimes limit your potential. Here’s why exploring underrated tools makes sense:

- Enhanced Efficiency: Some niche tools are designed to automate repetitive tasks and streamline workflows.

- Improved Model Accuracy: Advanced packages offer cutting-edge algorithms that may outperform standard libraries.

- Better Collaboration: Tools with superior version control and sharing features can enhance teamwork.

- Unique Insights: Some platforms provide visualisation and analysis options not available in mainstream tools.

- Competitive Edge: Mastering uncommon tools can make your CV truly stand out in a crowded job market.

1. DVC (Data Version Control)

DVC is an open-source tool that brings version control to your machine learning projects, much like Git does for code. In data science, datasets and model files often change, and keeping track of these changes can be chaotic.

Why it’s underrated:

- Manages datasets, models, and experiments with ease.

- Integrates with existing Git workflows.

- Enables reproducibility, a critical requirement in data science projects.

For professionals managing multiple experiments, DVC offers a level of organisation that Git alone, or ad hoc copies of data files sitting next to Jupyter notebooks, cannot easily match.
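
The core idea behind DVC is content addressing: it hashes a data file, stores the file in a local cache keyed by that hash, and writes a small `.dvc` pointer file that you commit to Git. The stdlib-only sketch below is a simplified, hypothetical illustration of that idea; the function names, the `.ptr` pointer format, and the cache layout are assumptions, not DVC's actual code or file format.

```python
import hashlib
import json
import shutil
from pathlib import Path

# Hypothetical, simplified illustration of content-addressed data versioning.
# This is NOT DVC's code or file format; names and layout are assumptions.


def file_md5(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large datasets never load fully into memory."""
    digest = hashlib.md5()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def cache_path(cache_dir: Path, md5: str) -> Path:
    """Split the hash into a short subdirectory plus a file name."""
    return cache_dir / md5[:2] / md5[2:]


def track(path: Path, cache_dir: Path) -> Path:
    """Copy the file into the cache and write a small pointer file.
    Only the pointer (a few bytes of JSON) would be committed to Git."""
    md5 = file_md5(path)
    target = cache_path(cache_dir, md5)
    if not target.exists():  # identical contents are stored only once
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(path, target)
    pointer = path.with_name(path.name + ".ptr")
    pointer.write_text(json.dumps({"path": path.name, "md5": md5}))
    return pointer


def checkout(pointer: Path, cache_dir: Path) -> Path:
    """Restore the exact file version recorded in a pointer file."""
    meta = json.loads(pointer.read_text())
    restored = pointer.with_name(meta["path"])
    shutil.copy2(cache_path(cache_dir, meta["md5"]), restored)
    return restored
```

In a real project you would run `dvc add` on the data file and commit the generated `.dvc` file with Git; switching back to an older version is then a `git checkout` of that pointer followed by `dvc checkout`.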

2. Orange

Orange is a visual programming tool used for data analysis and machine learning. While Python and R require coding, Orange lets users build workflows through a simple drag-and-drop interface.

Why it’s underrated:

- Ideal for rapid prototyping.

- Supports interactive data visualisation.

- Great for explaining models to non-technical stakeholders.

It’s particularly useful in collaborative settings where data scientists work with business users who may not have coding skills.

3. H2O.ai

H2O offers open-source platforms for machine learning and artificial intelligence that are remarkably powerful but often overshadowed by TensorFlow and Scikit-learn.

Why it’s underrated:

- Features AutoML capabilities for automatic model selection and tuning.

- Supports large-scale data and distributed computing.

- Integrates seamlessly with Python, R, and even Excel.

H2O’s AutoML has been gaining traction among enterprises looking to accelerate machine learning deployment without sacrificing accuracy.
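
At its core, AutoML means training several candidate models, scoring each with cross-validation, and ranking them on a leaderboard. The stdlib-only sketch below illustrates that loop with two deliberately simple, hypothetical candidates (a mean baseline and a one-feature least-squares line); it is not H2O's API, which searches far richer model families such as gradient boosting machines, GLMs, deep learning, and stacked ensembles.

```python
from statistics import mean
from typing import Callable, Dict, List, Tuple

Data = List[Tuple[float, float]]  # (x, y) pairs
Model = Callable[[float], float]


def fit_mean(train: Data) -> Model:
    """Baseline candidate: always predict the training-set mean of y."""
    y_bar = mean(y for _, y in train)
    return lambda x: y_bar


def fit_line(train: Data) -> Model:
    """Ordinary least squares for y = a + b * x with a single feature."""
    x_bar = mean(x for x, _ in train)
    y_bar = mean(y for _, y in train)
    sxx = sum((x - x_bar) ** 2 for x, _ in train)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in train)
    b = sxy / sxx if sxx else 0.0
    a = y_bar - b * x_bar
    return lambda x: a + b * x


def cv_mse(fit: Callable[[Data], Model], data: Data, k: int = 5) -> float:
    """k-fold cross-validated mean squared error (row i goes to fold i % k)."""
    errors: List[float] = []
    for fold in range(k):
        test = data[fold::k]
        train = [row for i, row in enumerate(data) if i % k != fold]
        model = fit(train)
        errors.extend((model(x) - y) ** 2 for x, y in test)
    return mean(errors)


def leaderboard(candidates: Dict[str, Callable[[Data], Model]],
                data: Data, k: int = 5) -> List[Tuple[str, float]]:
    """Score every candidate and sort best-first, AutoML style."""
    scores = [(name, cv_mse(fit, data, k)) for name, fit in candidates.items()]
    return sorted(scores, key=lambda item: item[1])


if __name__ == "__main__":
    data = [(float(x), 2.0 * x + 1.0) for x in range(20)]
    board = leaderboard({"mean_baseline": fit_mean, "linear": fit_line}, data)
    print(board[0][0])  # linear
```

In H2O itself, the equivalent workflow centres on the `H2OAutoML` class, whose `leaderboard` attribute holds the ranked models after training.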

4. KNIME (Konstanz Information Miner)

KNIME is a data analytics, reporting, and integration platform known for its modular, node-based approach to building data pipelines.

Why it’s underrated:

- Supports over 1,000 modules for everything from data cleaning to machine learning.

- Easily integrates with Python, R, and Spark.

- Excellent for building end-to-end data science workflows visually.

KNIME’s extensibility makes it a great choice for data scientists looking to create custom solutions without heavy coding.

5. Apache Superset

While Tableau and Power BI dominate the business intelligence space, Apache Superset is an open-source alternative that’s both powerful and flexible.

Why it’s underrated:

- Supports large datasets and real-time dashboards.

- Fully customisable with SQL and Python.

- No licensing costs, making it ideal for startups and SMEs.

Superset gives data scientists a way to visualise complex datasets while retaining full control over customisation.
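
Superset charts are ultimately driven by SQL run against a connected database, and a custom query can be saved as a virtual dataset for charts to build on. The sketch below uses Python's built-in `sqlite3` module to run the kind of aggregate query you might save that way; the `orders` table, its columns, and the sample rows are hypothetical, and in Superset the query would run against your own database rather than an in-memory SQLite one.

```python
import sqlite3

# Hypothetical sample rows standing in for a production table.
ORDERS = [
    ("2025-01-03", "North", 120.0),
    ("2025-01-17", "South", 80.0),
    ("2025-02-02", "North", 200.0),
    ("2025-02-11", "South", 50.0),
    ("2025-02-20", "North", 30.0),
]

# Monthly revenue per region: a tidy, pre-aggregated shape for a bar chart.
MONTHLY_REVENUE_SQL = """
SELECT substr(order_date, 1, 7) AS month,
       region,
       SUM(amount)              AS revenue,
       COUNT(*)                 AS orders
FROM orders
GROUP BY month, region
ORDER BY month, region
"""


def monthly_revenue(rows):
    """Load rows into an in-memory table and run the aggregate query."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE orders (order_date TEXT, region TEXT, amount REAL)")
        conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
        return conn.execute(MONTHLY_REVENUE_SQL).fetchall()
    finally:
        conn.close()


if __name__ == "__main__":
    for row in monthly_revenue(ORDERS):
        print(row)
```

Keeping the aggregation in SQL means the database does the heavy lifting, and the chart only has to render a handful of pre-computed rows.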

6. Optuna

Hyperparameter tuning is crucial for model performance, yet many practitioners stick to grid search or random search. Optuna is an automatic hyperparameter optimisation framework that uses sampling algorithms such as the Tree-structured Parzen Estimator (TPE) to decide which configurations to try next.

Why it’s underrated:

- Often reaches good configurations in fewer trials than exhaustive grid search.

- Supports pruning of unpromising trials.

- Integrates with major machine learning libraries.

By concentrating trials on promising regions and stopping poor ones early, Optuna can find better models at lower computational cost.
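
Pruning is where much of the saving comes from. Optuna's `MedianPruner`, for example, stops a trial when its best intermediate result so far is worse than the median of earlier trials' results at the same step. The stdlib-only sketch below re-implements that rule on top of plain random search so the mechanism is visible; the toy learning curve, the function names, and the defaults are hypothetical, with `n_startup` and `n_warmup` loosely mirroring `MedianPruner`'s `n_startup_trials` and `n_warmup_steps`.

```python
import random
from statistics import median
from typing import Dict, List, Optional, Tuple


def learning_curve(lr: float, step: int) -> float:
    """Hypothetical validation loss after `step` epochs: it decays like
    1/(step+1) toward a floor that is lowest when the learning rate is 0.1."""
    return (lr - 0.1) ** 2 + 1.0 / (step + 1)


def tune(n_trials: int = 30, n_steps: int = 10, n_startup: int = 5,
         n_warmup: int = 2, seed: int = 0) -> Tuple[Optional[float], float, int]:
    """Random search with a median-stopping rule in the spirit of MedianPruner."""
    rng = random.Random(seed)
    history: Dict[int, List[float]] = {}  # step -> losses of completed trials
    completed, pruned = 0, 0
    best_lr: Optional[float] = None
    best_loss = float("inf")
    for _ in range(n_trials):
        lr = 10 ** rng.uniform(-3, 0)  # log-uniform sample in [0.001, 1)
        reported: List[float] = []
        for step in range(n_steps):
            reported.append(learning_curve(lr, step))
            # Prune only after the startup trials and warm-up steps, and only if
            # this trial's best loss so far is worse than the median at this step.
            if (completed >= max(n_startup, 1) and step >= n_warmup
                    and min(reported) > median(history[step])):
                pruned += 1
                break
        else:  # the inner loop finished without pruning: record the trial
            completed += 1
            for step, loss in enumerate(reported):
                history.setdefault(step, []).append(loss)
            if reported[-1] < best_loss:
                best_lr, best_loss = lr, reported[-1]
    return best_lr, best_loss, pruned


if __name__ == "__main__":
    best_lr, best_loss, pruned = tune()
    print(f"best lr={best_lr:.4f}, final loss={best_loss:.4f}, pruned {pruned} of 30 trials")
```

With Optuna itself, you would call `trial.report(value, step)` and check `trial.should_prune()` inside your objective function, and pass `pruner=optuna.pruners.MedianPruner()` to `optuna.create_study`.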

7. Great Expectations

Data validation often gets overlooked, but it is vital for building reliable models. Great Expectations is a tool that helps you document, validate, and profile the data flowing through your pipelines.

Why it’s underrated:

- Automated data quality checks.

- Supports integration with Spark, SQL, and Pandas.

- Facilitates collaboration between data teams and business users.

With increasing scrutiny on data quality, mastering tools like Great Expectations can significantly boost your data pipeline’s credibility.
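
Great Expectations expresses data-quality rules as named, declarative "expectations" such as `expect_column_values_to_not_be_null` and `expect_column_values_to_be_between`, then reports which records violate them. The sketch below mimics that pattern in plain Python on a list of dict records so the idea is clear without installing anything; it is a hypothetical stand-in, not the library's API, whose entry points have changed considerably between major versions.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

# Hypothetical, minimal stand-in for declarative data-quality checks.
# Function names mirror Great Expectations expectation names, but this is
# NOT the library's API.

Rows = List[Dict[str, Any]]


@dataclass
class Result:
    expectation: str
    column: str
    success: bool
    unexpected_rows: List[int] = field(default_factory=list)


def expect_column_values_to_not_be_null(rows: Rows, column: str) -> Result:
    """Flag rows where the column is missing or None."""
    bad = [i for i, row in enumerate(rows) if row.get(column) is None]
    return Result("expect_column_values_to_not_be_null", column, not bad, bad)


def expect_column_values_to_be_between(rows: Rows, column: str,
                                       min_value=None, max_value=None) -> Result:
    """Flag non-null values outside [min_value, max_value]; in this sketch,
    nulls are left to the null check above."""
    bad = []
    for i, row in enumerate(rows):
        value = row.get(column)
        if value is None:
            continue
        too_low = min_value is not None and value < min_value
        too_high = max_value is not None and value > max_value
        if too_low or too_high:
            bad.append(i)
    return Result("expect_column_values_to_be_between", column, not bad, bad)


def validate(rows: Rows, checks: List[Callable[[Rows], Result]]) -> Dict[str, Any]:
    """Run every check and summarise, so one failure does not hide the others."""
    results = [check(rows) for check in checks]
    return {"success": all(r.success for r in results), "results": results}


if __name__ == "__main__":
    rows = [{"age": 34}, {"age": None}, {"age": 212}]
    report = validate(rows, [
        lambda r: expect_column_values_to_not_be_null(r, "age"),
        lambda r: expect_column_values_to_be_between(r, "age", 0, 120),
    ])
    for result in report["results"]:
        print(result.expectation, result.success, result.unexpected_rows)
    # expect_column_values_to_not_be_null False [1]
    # expect_column_values_to_be_between False [2]
```

Because each rule is named and declarative, the same list of checks doubles as readable documentation of what "good data" means for the pipeline.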

8. Databricks Notebooks

While Jupyter remains the standard for many, Databricks offers a cloud-based alternative that integrates with Spark and Delta Lake.

Why it’s underrated:

- Superior collaboration features.

- Scales to large datasets by running on Spark clusters rather than a single machine.

- Built-in version control and job scheduling.

For enterprise-scale projects, Databricks Notebooks provide robustness and scalability that traditional notebooks can struggle with.

Skills to Master These Tools

Learning these underrated tools requires a mix of foundational skills and specialised knowledge:

- Programming Proficiency: Python remains central, although tools like KNIME and Orange require less coding.

- Cloud Computing: Many modern data science tools are optimised for cloud environments like AWS, Azure, and GCP.

- Big Data Technologies: Familiarity with Spark, Hadoop, and distributed computing frameworks can be beneficial.

- Version Control: Knowing Git is essential for tools like DVC.

- Data Visualisation: Skills in creating dashboards and reports help in making results actionable.

For those seeking structured learning paths, enrolling in a data science course can provide exposure to these modern tools alongside the classics.

The Future of Data Science Tools

As we move deeper into 2025, several trends are shaping the future of data science tools:

- AutoML Adoption: Tools that automate model building and tuning are on the rise.

- No-Code and Low-Code Solutions: Platforms like Orange and KNIME are making data science accessible to non-programmers.

- Data-Centric AI: Emphasis is shifting from model optimisation to improving data quality.

- Privacy-Preserving Tools: New frameworks are emerging to handle data securely, especially in healthcare and finance.

- Interoperability: Tools that easily integrate with existing ecosystems will see greater adoption.

Staying ahead in your career means not only mastering today’s tools but also keeping an eye on emerging ones that can make your work more impactful.

Practical Tips for Adopting New Tools

Venturing beyond familiar tools can seem daunting. Here are some practical tips:

- Start Small: Try using the tool on a pet project before deploying it in production.

- Leverage Community Support: Most open-source tools have vibrant user communities.

- Read Case Studies: Learning how other organisations have implemented the tool can provide valuable insights.

- Combine Tools: Many underrated tools integrate well with mainstream ones.

- Upskill Continuously: Consider formal training or workshops to accelerate your learning curve.

By gradually incorporating new tools into your workflow, you’ll not only expand your capabilities but also future-proof your career.

Conclusion: Diversify Your Data Science Toolkit

While mainstream tools like Python, TensorFlow, and Tableau will continue to dominate, the underrated tools discussed in this article can offer unique advantages that make your data science projects more efficient, accurate, and scalable. Whether it’s version control with DVC, hyperparameter tuning with Optuna, or data validation with Great Expectations, each tool fills a critical niche that general-purpose platforms often overlook.

For professionals looking to advance their careers through a data scientist course in Hyderabad, exposure to such tools is becoming increasingly valuable. Employers are looking for data scientists who not only know the basics but also bring versatile toolkits and innovative problem-solving approaches.

By staying curious and proactive about emerging tools, you’ll not only enhance your daily workflow but also position yourself as a forward-thinking expert in the competitive world of data science. As 2025 unfolds, make it the year you go beyond the obvious and embrace the underrated heroes of your craft.

ExcelR – Data Science, Data Analytics and Business Analyst Course Training in Hyderabad

Address: Cyber Towers, PHASE-2, 5th Floor, Quadrant-2, HITEC City, Hyderabad, Telangana 500081

Phone: 096321 56744